System Methodology

The aim of this project is to develop a class attendance system based on face recognition. This involves studying biometric technology, face detection, and face recognition. The development of this project consists of five main parts, which are discussed later in this chapter.

Project Overview

Figure 3.3: System overview

Figure 3.3 shows an overview of the system. The input image, captured by a CCTV camera, is transferred to MATLAB for the student recognition process. If the system recognizes a student, that student's attendance is marked.

System Development

The system is developed using the Computer Vision Toolbox in MATLAB. A graphical user interface (GUI) is developed so that users can interact with the system through graphical buttons and icons. The main steps in implementing this project are as follows:

- Student registration

- Image Acquisition

- Face detection

- Face recognition

- Attendance

Student Registration

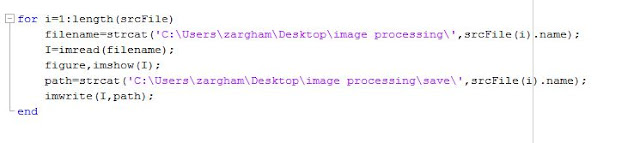

The first step in every biometric system [24] is the enrollment, or registration, of students using their general data and their unique biometric features as templates. This work uses the enrollment algorithm shown in Figure 3.4. An image is captured from the CCTV camera and then enhanced by removing noise. In the second step, the face is detected in the image and features are extracted from it. These unique features are then stored in the face database.

Figure 3.4: Registration Process [5].
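The registration flow can be illustrated with a minimal pure-Python sketch. It rests on assumptions the text does not specify: noise is removed with a 3 by 3 median filter, the face region is assumed to be already detected and cropped, the flattened pixels stand in for the extracted features, and `face_db`, `enroll` and the record layout are hypothetical names rather than the project's MATLAB code.

```python
from statistics import median


def median_filter_3x3(img):
    """Replace each interior pixel by the median of its 3x3 neighborhood.

    Border pixels are copied unchanged to keep the sketch short.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = median(window)
    return out


def enroll(face_db, student_id, name, face_img):
    """Denoise a cropped face and store it as a template (hypothetical schema)."""
    clean = median_filter_3x3(face_img)
    template = [p for row in clean for p in row]  # flattened pixels stand in for features
    record = face_db.setdefault(student_id, {"name": name, "templates": []})
    record["templates"].append(template)
    return face_db


db = {}
noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]  # one impulse ("salt") pixel
enroll(db, "S01", "Student 1", noisy)
print(db["S01"]["templates"][0][4])  # 10: the impulse has been removed
```

Storing a list of templates per student lets several registration images be kept for the same person.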

Image Acquisition

In the image acquisition step, an input image containing the students' faces is captured through the CCTV camera. Once the input image is captured, the students' faces are detected and recognized in order to mark their attendance.

Face Detection

Face detection is a complex image processing problem that identifies human faces in images and videos. It is a necessary step in face recognition systems, where its purpose is to localize and extract the face region from the background. Many methods exist to solve this problem, such as skin-based face detection, the AdaBoost method, neural networks, SVM and MRC. Among these, we implemented the Viola-Jones face detection algorithm, which is robust and has a very high detection rate. It is a real-time face detection algorithm; for practical applications, it must process at least two frames per second.

The Viola-Jones face detection algorithm has four main parts:

- Haar-like Features

- Integral Image

- AdaBoost method

- Cascading

Haar-Like Features

Haar features are similar to convolution kernels: each one is used to detect the presence of a particular pattern in the given image. The Viola-Jones face detection algorithm uses more than 160,000 Haar features. Five of the Haar feature types most commonly used by the algorithm are shown in Figure 3.9.

Figure 3.9: Haar features used in Viola-Jones
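As a rough check on the size of this feature set, the sketch below enumerates every position and scale of the rectangle features inside a 24 by 24 window. It assumes the five basic shapes are the horizontal and vertical two-rectangle features, the horizontal and vertical three-rectangle features, and the four-rectangle feature; under that assumption the count comes to 162,336, in line with the figure of more than 160,000 features used in this chapter.

```python
def count_haar_features(window=24, base_shapes=((2, 1), (1, 2), (3, 1), (1, 3), (2, 2))):
    """Count every position and scale of each rectangle-feature shape in a square window.

    A base shape (w0, h0) may be stretched to any width that is a multiple of w0
    and any height that is a multiple of h0, then placed at every position that fits.
    """
    total = 0
    for w0, h0 in base_shapes:
        for w in range(w0, window + 1, w0):
            for h in range(h0, window + 1, h0):
                total += (window - w + 1) * (window - h + 1)
    return total


print(count_haar_features())  # 162336
```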

Each feature is applied to an image according to the characteristics of facial features. Each Haar feature results in a single value, calculated by subtracting the sum of all pixels under the white rectangle from the sum of all pixels under the black rectangle.
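A direct, unoptimized computation of one feature value may make this definition concrete. The sketch assumes a vertical two-rectangle feature with the white rectangle on top and the black rectangle below; `rect_sum` and `two_rect_feature` are illustrative names, and the value follows the convention above of black sum minus white sum.

```python
def rect_sum(img, x, y, w, h):
    """Brute-force sum of the w x h rectangle whose top-left pixel is (x, y)."""
    return sum(img[r][c] for r in range(y, y + h) for c in range(x, x + w))


def two_rect_feature(img, x, y, w, h):
    """Vertical two-rectangle Haar feature (assumed layout: white on top, black below).

    Returns sum(black) - sum(white).
    """
    half = h // 2
    white = rect_sum(img, x, y, w, half)
    black = rect_sum(img, x, y + half, w, half)
    return black - white


# A 4x2 patch: dark upper half (e.g. an eye region), bright lower half (e.g. a cheek).
patch = [[10, 10], [10, 10], [200, 200], [200, 200]]
print(two_rect_feature(patch, 0, 0, 2, 4))  # 760 = 800 - 40
```

Summing pixels this way costs time proportional to the rectangle area, which is the cost the integral image removes.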

Figure 3.10 shows Haar features applied to an input image. In this figure, two Haar features are applied to the input image according to the characteristics of facial features.

Figure 3.10: Applying Haar feature to input image

Each of these Haar features resembles some facial feature. The Viola-Jones face detection algorithm uses a 24 by 24 pixel window to evaluate these features in any given image. Within the first 24 by 24 window, the algorithm evaluates every Haar feature, each producing a single value, and then slides the window across the image until it reaches the end of the input image. Evaluating such a huge set of Haar features at every window position is computationally expensive and practically impossible for real-time face detection. The basic idea is therefore to eliminate the redundant features that are not useful and keep only those that are. To overcome this problem, the algorithm uses AdaBoost, which eliminates the redundant features and narrows the set down to a few thousand useful features. The AdaBoost method is described later in this chapter.
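The cost that motivates AdaBoost can be estimated with a short sketch that counts window positions. The 384 by 288 image size, the one-pixel step and the single scale are assumptions chosen for illustration, and 162,336 is taken as the number of rectangle features in one 24 by 24 window (the count obtained by enumerating the five basic feature shapes at every position and scale).

```python
def sliding_windows(img_h, img_w, size=24, step=1):
    """Yield the top-left corner (x, y) of every size x size window inside the image."""
    for y in range(0, img_h - size + 1, step):
        for x in range(0, img_w - size + 1, step):
            yield x, y


n_windows = sum(1 for _ in sliding_windows(288, 384))
print(n_windows)             # 95665 windows at a single scale
print(n_windows * 162336)    # 15529873440 feature evaluations without feature selection
```

Even at a single scale this amounts to roughly 15.5 billion feature evaluations per image, which is why the full feature set cannot be evaluated in real time.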

Integral Image

Since a huge number of rectangular Haar features must be evaluated within each 24 by 24 window, the Viola-Jones algorithm uses a neat technique to reduce the computation instead of summing all pixel values under the black and white rectangles every time. Viola and Jones introduced the concept of the integral image, which gives the sum of all pixels under a rectangle using just the four corner values of the integral image.

In an integral image, the value at each pixel (x,y) is the sum of all the pixels above and to the left of (x,y), inclusive. Each integral image value is therefore obtained by summing all input pixels from the top-left corner of the image up to that pixel. Figure 3.11 shows an input image and its corresponding integral image, where each value is the sum of all input pixels above and to the left of that position.

Figure 3.11: Example of Integral Image
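Formally, ii(x, y) = Σ i(x′, y′) over all x′ ≤ x and y′ ≤ y, where ii denotes the integral image and i the input image (notation assumed here). The sketch below computes it in a single pass using a running row sum; it is a plain-Python illustration, not the MATLAB routine used in the project.

```python
def integral_image(img):
    """ii[y][x] = sum of img[r][c] over all r <= y and c <= x (inclusive)."""
    h, w = len(img), len(img[0])
    ii = [[0] * w for _ in range(h)]
    for y in range(h):
        row_sum = 0  # running sum of img[y][0..x]
        for x in range(w):
            row_sum += img[y][x]
            # add the integral value directly above, which covers all earlier rows
            ii[y][x] = row_sum + (ii[y - 1][x] if y > 0 else 0)
    return ii


print(integral_image([[1, 1, 1], [1, 1, 1], [1, 1, 1]]))
# [[1, 2, 3], [2, 4, 6], [3, 6, 9]]
```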

An integral image allows the sum of all pixels inside any given rectangle to be calculated using only the four values at the corners of the rectangle. In the integral image of Figure 3.12, the sum of patch D is obtained by adding the values at corners 1 and 4 and subtracting the values at corners 2 and 3, that is, D = 4 + 1 − (2 + 3). This works because corner 1 holds the sum of patch A, corner 2 the sum of patches A and B, corner 3 the sum of patches A and C, and corner 4 the sum of patches A, B, C and D.

Figure 3.12: Example of integral image
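The four-corner lookup can be sketched as follows. To avoid special cases at the image border, this version pads the integral image with a leading row and column of zeros (a common implementation choice, assumed here); the helper names are illustrative. With the corners labeled as in Figure 3.12, the sum of patch D is 4 + 1 − (2 + 3).

```python
def padded_integral_image(img):
    """P[y][x] = sum of img[r][c] over r < y and c < x; row 0 and column 0 are zeros."""
    h, w = len(img), len(img[0])
    P = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        for x in range(w):
            P[y + 1][x + 1] = img[y][x] + P[y][x + 1] + P[y + 1][x] - P[y][x]
    return P


def rect_sum(P, x, y, w, h):
    """Sum of the w x h rectangle with top-left pixel (x, y) from four corner lookups.

    corner 1 = P[y][x]          (patch A)
    corner 2 = P[y][x + w]      (A + B)
    corner 3 = P[y + h][x]      (A + C)
    corner 4 = P[y + h][x + w]  (A + B + C + D)
    D = 4 + 1 - (2 + 3)
    """
    return P[y + h][x + w] + P[y][x] - (P[y][x + w] + P[y + h][x])


img = [[r * 4 + c for c in range(4)] for r in range(4)]  # values 0..15
P = padded_integral_image(img)
print(rect_sum(P, 1, 1, 2, 2))  # 30 = 5 + 6 + 9 + 10
```

Each rectangle sum now costs four lookups regardless of its size; two adjacent rectangles share two corners, so a two-rectangle feature needs only six lookups.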

AdaBoost

As stated previously, there are approximately 160,000+ feature values that need to be calculated within a detector at the 24 by 24 base resolution window. However, only a small set of these features is useful for identifying a face. In Figure 3.13, the Haar feature in image A is relevant because it identifies the nose region and extracts the nose feature of the face. The Haar feature applied to image B, however, gives no relevant information because it does not extract any facial feature; it is therefore irrelevant and is eliminated so that it is not considered in further evaluation. In this way, relevant and irrelevant features can be distinguished among all 160,000+ features, and only the few features that are relevant for face detection are selected.

Figure 3.13: Relevant and Irrelevant features

AdaBoost is a machine learning algorithm that finds only the best features among all these 160,000+ features. After these features are found, a weighted combination of them is used to decide whether any given window contains a face. A feature is selected only if it performs better than random guessing (that is, it classifies more than half of the cases correctly). These features are also called weak classifiers. AdaBoost constructs a strong classifier as a linear combination of these weak classifiers, as shown in Equation 3.1.

$$F(x) = \alpha_1 f_1(x) + \alpha_2 f_2(x) + \alpha_3 f_3(x) + \cdots \qquad (3.1)$$

In Equation 3.1, F(x) is the strong classifier, f1(x), f2(x), f3(x), … are the weak classifiers, and α1, α2, α3, … are their corresponding weights. The strong classifier F(x) decides whether the input image contains a face, while each weak classifier corresponds to a single relevant or irrelevant feature. AdaBoost extracts the weak classifiers and combines them with their corresponding weights to form a strong classifier, or strong detector. The output of a weak classifier is either 1 or 0; it is 1 if the feature is detected when it is applied to the image. In Figure 3.13, the weak classifier in image A outputs 1 because its Haar feature extracts a certain feature of the face, whereas the weak classifier in image B outputs 0 because its Haar feature does not extract any feature of the face. All these weak classifiers together form a strong classifier; generally, 2,500 features are used to form a strong classifier.
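The weak and strong classifiers can be sketched following the formulation in Viola and Jones [3], where each weak classifier thresholds a single feature value and the strong classifier compares the weighted vote of Equation 3.1 against half the total weight. The thresholds, polarities and errors below are placeholders; in practice they are learned during AdaBoost training, which is not shown, and the function names are illustrative.

```python
import math


def weak_classifier(feature_value, threshold, polarity):
    """h(x) = 1 if polarity * f(x) < polarity * threshold, else 0."""
    return 1 if polarity * feature_value < polarity * threshold else 0


def alpha_from_error(error):
    """AdaBoost weight for a weak classifier with weighted error 0 < error < 0.5."""
    beta = error / (1.0 - error)
    return math.log(1.0 / beta)


def strong_classifier(feature_values, stumps, alphas):
    """F(x) = 1 if sum(alpha_t * h_t(x)) >= 0.5 * sum(alpha_t), else 0."""
    score = sum(a * weak_classifier(f, thr, pol)
                for f, (thr, pol), a in zip(feature_values, stumps, alphas))
    return 1 if score >= 0.5 * sum(alphas) else 0


stumps = [(10.0, 1), (5.0, -1), (0.0, 1)]  # (threshold, polarity), placeholder values
alphas = [alpha_from_error(e) for e in (0.1, 0.2, 0.3)]
print(strong_classifier([3.0, 8.0, -1.0], stumps, alphas))  # 1: every weak vote fires
print(strong_classifier([20.0, 2.0, 5.0], stumps, alphas))  # 0: no weak vote fires
```

A weak classifier with lower error receives a larger weight α, so more reliable features have a stronger say in the final decision.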

Cascading

In every 24 by 24 window, 2,500 weak classifiers would have to be evaluated and linearly combined. Instead of calculating all 2,500 features on every single window, the algorithm uses a hierarchy of classifiers: the 2,500 features are arranged in a cascade of stages that determines whether a window contains a face. With this technique, windows that do not contain faces can be rejected very quickly. Consider Figure 3.14, where we want to detect faces in an input image. Instead of evaluating all 2,500 features at once, the features are split into several stages, each containing a certain number of features, and every window of the input image is passed through the stages in order. At each stage, if the window contains no face, it is discarded immediately; if it may contain a face, it is passed on to the next stage for confirmation. In real-time face detection applications, this quick rejection of windows that do not contain faces is a major advantage.

Figure 3.14: Cascade Classifier.
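The early-rejection logic of the cascade can be sketched as below. The stage structure (a list of weak classifiers, their weights and a per-stage threshold) is an assumption modeled on the description above, and `cascade_detect` is a hypothetical name; real stage thresholds are tuned during training to keep the detection rate high.

```python
def cascade_detect(window, stages):
    """Evaluate one window against a cascade; return (is_face, stages_evaluated).

    Each stage is (weak_classifiers, alphas, stage_threshold), where every weak
    classifier maps the window to 0 or 1. The window is rejected at the first stage
    whose weighted vote falls below its threshold, so later stages never run for it.
    """
    for k, (weak_clfs, alphas, stage_threshold) in enumerate(stages, start=1):
        score = sum(a * h(window) for h, a in zip(weak_clfs, alphas))
        if score < stage_threshold:
            return False, k  # rejected early
    return True, len(stages)  # survived every stage: report a face


stages = [
    ([lambda w: 1 if w[0] > 0 else 0], [1.0], 0.5),
    ([lambda w: 1 if w[1] > 0 else 0, lambda w: 1 if w[2] > 0 else 0], [0.6, 0.4], 0.5),
]
print(cascade_detect((-1, 1, 1), stages))  # (False, 1): rejected by the first stage
print(cascade_detect((1, 1, 0), stages))   # (True, 2)
```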

Face Recognition

Face recognition is used to identify or verify one or more persons in a scene using a stored database of faces [22]. It involves face detection, feature extraction and recognition. In the recognition problem, the input is an unknown face and the output is the identity determined from a database of known faces. Many methods exist to solve the face recognition problem; we use Eigenface because it is one of the most widely used.

Face Recognition Using Eigenface

Eigenface is a well-studied face recognition method based on principal component analysis (PCA), popularized by the seminal work of Turk and Pentland [4]. We implemented the eigenface algorithm using the following steps.

Faces Database

First, we collected a set of face images: 300 face images of 20 students. These images form our database of known faces, against which we later determine whether an unknown face matches any known face. All face images must be the same size in pixels and, for our purposes, must be gray-scale with values ranging from 0 to 255; each image is uniquely indexed. Our face database contains 20 classes, where each class consists of 30 instances.

The algorithm later uses this face database for comparison against each input image.

Steps of Eigenface Algorithm

1. The first step is to obtain a set S with M face images. Each image is transformed into a vector of size N and placed into the set.

2. The second step is to reshape every 2D image of the training database into a 1D column vector and place these column vectors side by side to construct a 2D matrix. Reshaping all images into 1D creates a face space in which each column represents one image in the training database. The eigenface method then uses PCA to determine the most discriminating features among all images in the face space.

3. Using the 2D matrix created in step 2, the algorithm extracts the features. First, it computes the mean image Ψ:

$$\Psi = \frac{1}{M}\sum_{n=1}^{M}\Gamma_n$$

where Γn is the nth face vector.

In the above equation, M is the number of faces in our face database. The mean is computed by adding the image columns pixel by pixel and dividing the result by the total number of images M.

Figure 3.15: Mean Image of our faces database

4. Next, the deviation of each image from the mean image is computed:

$$\Phi_i = \Gamma_i - \Psi, \qquad i = 1, \ldots, M$$

where Γi is the ith face vector.

The difference images are needed to compute the covariance matrix of the data set. The covariance between two sets reveals how strongly they correlate; in other words, it describes the intensity relationship between the two sets. The covariance matrix of the difference images is

$$C = \frac{1}{M}\sum_{n=1}^{M}\Phi_n\Phi_n^{T}$$

5. Compute the eigenvectors (eigenfaces) of the covariance matrix. Let A = [Φ1 Φ2 … ΦM] be the N × M matrix whose columns are the difference vectors, so that C = (1/M) AAᵀ. Because the M training images are mean-centered, C has at most M − 1 non-zero eigenvalues. Since the number of training images M is usually much smaller than the number of pixels N, the eigenvectors of the small M × M surrogate matrix L = AᵀA are computed instead of those of the N × N matrix AAᵀ, which greatly reduces the dimensionality of the problem. If vi is an eigenvector of L with eigenvalue λi, then

$$AA^{T}(Av_i) = A(A^{T}Av_i) = \lambda_i (Av_i)$$

so ui = Avi is an eigenvector of AAᵀ, and hence of C. These eigenvectors ui are called eigenfaces.

6. Sort and eliminate eigenvalues. The eigenvalues of L are sorted, and those below a specified threshold are eliminated together with their eigenvectors, so the number of retained eigenvectors may be less than M − 1. The eigenvectors of the covariance matrix C are then obtained from the retained eigenvectors of L as described in step 5.
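The training steps above can be sketched in pure Python. This is a minimal illustration, not the project's MATLAB implementation: images are flattened row by row, and because no linear-algebra library is used, the eigenvectors of L are found with power iteration and deflation, a substitute for a library eigensolver such as MATLAB's `eig`. All function names are hypothetical.

```python
import math
import random


def to_vector(img):
    """Steps 1-2: flatten a 2D gray-scale image row by row into a length-N list."""
    return [float(p) for row in img for p in row]


def mean_face(gammas):
    """Step 3: Psi = (1/M) * sum_n Gamma_n, computed pixel by pixel."""
    M = len(gammas)
    return [sum(pixels) / M for pixels in zip(*gammas)]


def difference_vectors(gammas, psi):
    """Step 4: Phi_i = Gamma_i - Psi; these are the columns of A."""
    return [[g - p for g, p in zip(gamma, psi)] for gamma in gammas]


def gram_matrix(phis):
    """Step 5: surrogate L = A^T A (M x M), entry (i, j) = Phi_i . Phi_j."""
    return [[sum(a * b for a, b in zip(pi, pj)) for pj in phis] for pi in phis]


def power_iteration(S, iters=1000, seed=0):
    """Dominant eigenpair of a symmetric positive semi-definite matrix S."""
    rng = random.Random(seed)
    n = len(S)
    v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        w = [sum(S[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0, v  # nothing left to extract
        v = [x / norm for x in w]
    lam = sum(v[i] * sum(S[i][j] * v[j] for j in range(n)) for i in range(n))
    return lam, v


def eigenfaces(phis, threshold=1e-9):
    """Steps 5-6: unit eigenfaces u = A v from eigenvectors v of L, largest first."""
    M, N = len(phis), len(phis[0])
    S = gram_matrix(phis)
    faces = []
    for _ in range(M - 1):  # centered data has at most M - 1 non-zero eigenvalues
        lam, v = power_iteration(S)
        if lam <= threshold:
            break  # step 6: remaining eigenvalues fall below the threshold
        u = [sum(v[j] * phis[j][p] for j in range(M)) for p in range(N)]  # u = A v
        norm = math.sqrt(sum(x * x for x in u))
        faces.append((lam, [x / norm for x in u]))
        # Deflation: subtract the found component so the next pass finds the next eigenpair.
        S = [[S[i][j] - lam * v[i] * v[j] for j in range(M)] for i in range(M)]
    return faces
```

Because the code only ever forms the M × M matrix L, its cost grows with the number of training images rather than with the size of the N × N covariance matrix, which is the point of step 5.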

Recognition Procedure

In recognition step, the algorithm compares two faces by projecting the images onto the face space and measures the Euclidean distance between them.

This part is executed with the following steps:

- Project the centered training image vectors onto the face space.

- Extract the PCA features of the test image by centering it (subtracting the mean image Ψ) and projecting it onto the same face space.

- Calculate the Euclidean distance between the projected test image and the projection of every centered training image. The test image is expected to have the minimum distance to its corresponding image in the training database.
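The recognition procedure can be sketched as follows, assuming the mean image Ψ and a list of unit-length eigenfaces have already been computed during training. Each face is represented by its weights ωk = uk · (Γ − Ψ) in the face space, and the nearest training image by Euclidean distance gives the label; the function names are hypothetical.

```python
import math


def project(vector, psi, eigenfaces):
    """Face-space weights omega_k = u_k . (Gamma - Psi) for each eigenface u_k."""
    centered = [g - p for g, p in zip(vector, psi)]
    return [sum(u_p * c_p for u_p, c_p in zip(u, centered)) for u in eigenfaces]


def recognize(test_vector, train_vectors, labels, psi, eigenfaces):
    """Return (label, distance) of the training image nearest to the test image."""
    test_w = project(test_vector, psi, eigenfaces)
    best_label, best_dist = None, math.inf
    for vec, label in zip(train_vectors, labels):
        d = math.dist(test_w, project(vec, psi, eigenfaces))
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label, best_dist


# Toy face space with a single eigenface along the first pixel axis.
print(recognize([9.0, 0.0], [[0.0, 5.0], [10.0, 5.0]], ["S01", "S02"], [0.0, 0.0], [[1.0, 0.0]]))
# ('S02', 1.0)
```

In practice the training projections would be computed once and cached rather than recomputed for every test image, as this sketch does for brevity.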

Thus, the test face is recognized as the person whose training image lies closest to it in the face space.

Attendance Marking

Once students are recognized, their attendance is marked and the attendance information is stored in a database.
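A minimal sketch of the storage step, using Python's built-in `sqlite3` module as a stand-in for whatever database the project uses. The `attendance` table, its columns and the `mark_attendance` helper are hypothetical; the primary key on (student_id, session_date) ensures that a student recognized several times in the same session is marked present only once.

```python
import sqlite3
from datetime import date


def mark_attendance(conn, student_ids, session_date=None):
    """Insert one 'present' row per recognized student; repeats on the same day are ignored."""
    session_date = session_date or date.today().isoformat()
    conn.execute(
        "CREATE TABLE IF NOT EXISTS attendance ("
        " student_id TEXT NOT NULL,"
        " session_date TEXT NOT NULL,"
        " status TEXT NOT NULL DEFAULT 'present',"
        " PRIMARY KEY (student_id, session_date))"
    )
    conn.executemany(
        "INSERT OR IGNORE INTO attendance (student_id, session_date) VALUES (?, ?)",
        [(sid, session_date) for sid in student_ids],
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
mark_attendance(conn, ["S01", "S02", "S01"], "2024-01-15")  # S01 recognized twice
print(conn.execute("SELECT student_id, status FROM attendance ORDER BY student_id").fetchall())
# [('S01', 'present'), ('S02', 'present')]
```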

Conclusion

To obtain the attendance of students, images of the whole class are taken with the CCTV camera. Each image is processed with image preprocessing techniques to detect the students' faces, and the detected faces are then compared against the face database. Once the system recognizes the students, their attendance is marked and stored in a database. This approach saves time otherwise spent on taking attendance, which can instead be used for teaching. The system is portable, since the devices can be taken wherever a class is scheduled. The system prevents proxy attendance and generates an attendance report that both teachers and students can access whenever they wish. For face detection, the Viola-Jones algorithm is used because it is robust, has a very high detection rate, and works in real time, processing at least two frames per second as required for practical applications. For face recognition, the Eigenface algorithm is used for feature extraction because it is less dependent on facial expression, computationally fast, and less sensitive to noise.

References

[2] H. Mallik, "An automated student attendance registering system using face recognition," Bachelor's thesis, Department of Electronics and Communication Engineering, National Institute of Technology, Rourkela, 2015.

[3] P. Viola and M. Jones, "Robust real-time face detection," International Journal of Computer Vision, vol. 57, no. 2, pp. 137–154, 2004.

[4] M. Turk and A. Pentland, "Face recognition using eigenfaces," in Proc. IEEE Conference on Computer Vision and Pattern Recognition, 1991.

[5] T. Muni Reddy, V. Prasad, and N. V. Ramanaiah, "Face recognition based attendance management system by using embedded Linux," International Journal of Engineering Research and Science & Technology, vol. 4, no. 2, May 2015, ISSN 2319-5991.